In the previous post we looked at how Azure-consistent storage is provisioned in a region and which components are involved in making this happen. In this post we continue our journey through Azure Stack storage and look at which storage types are available and how blob and table storage work in more detail.

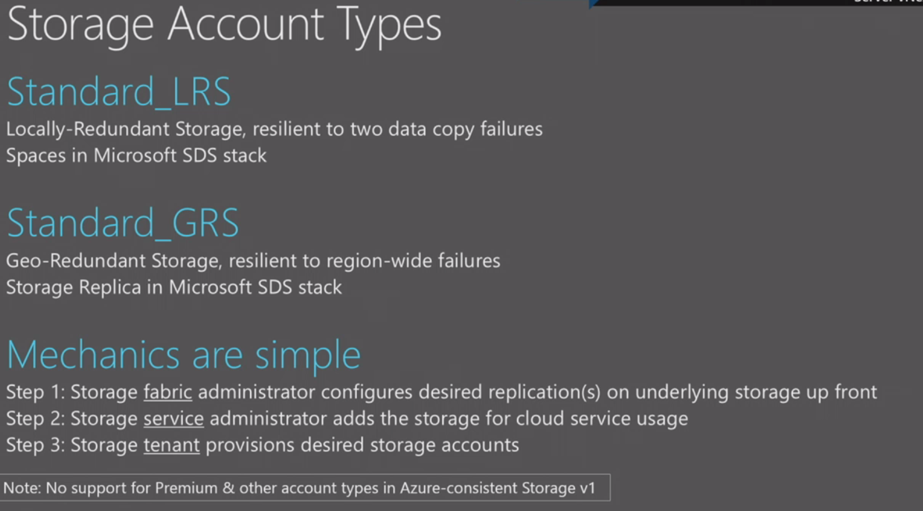

Let's look at the storage account types. You can choose between:

- Standard_LRS: locally-redundant storage, resilient to two data copy failures

- Standard_GRS: geo-redundant storage, resilient to region-wide failures

The technology behind Standard_LRS is Storage Spaces in the Microsoft Software Defined Storage stack. Standard_GRS uses the new Storage Replica feature to replicate data between regions.

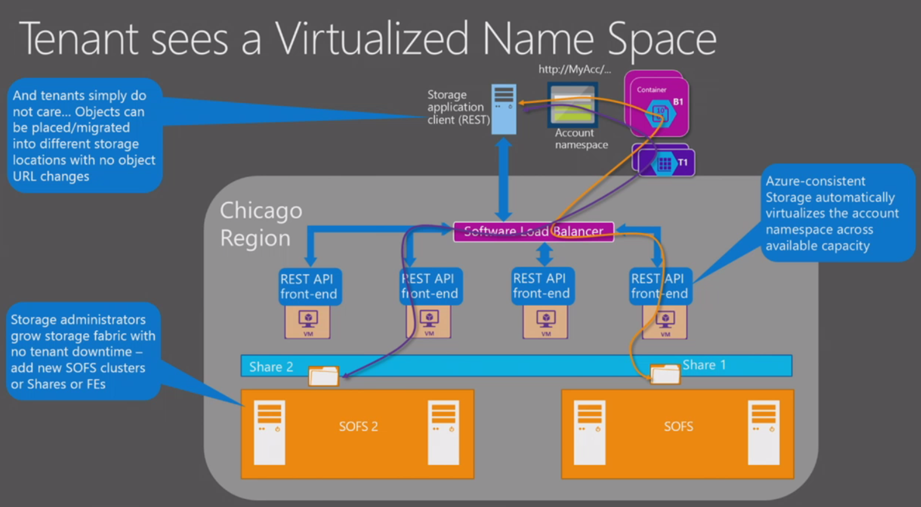

For the tenant it remains one virtualized account namespace, for example https://MyStorageAcc.Storage.Amsterdam.Azurestack.nl, and a region failover is transparent to the end user. The same applies when the Storage Service Provider administrator adds new SOFS clusters: objects are placed in or migrated to different storage locations behind the same namespace.

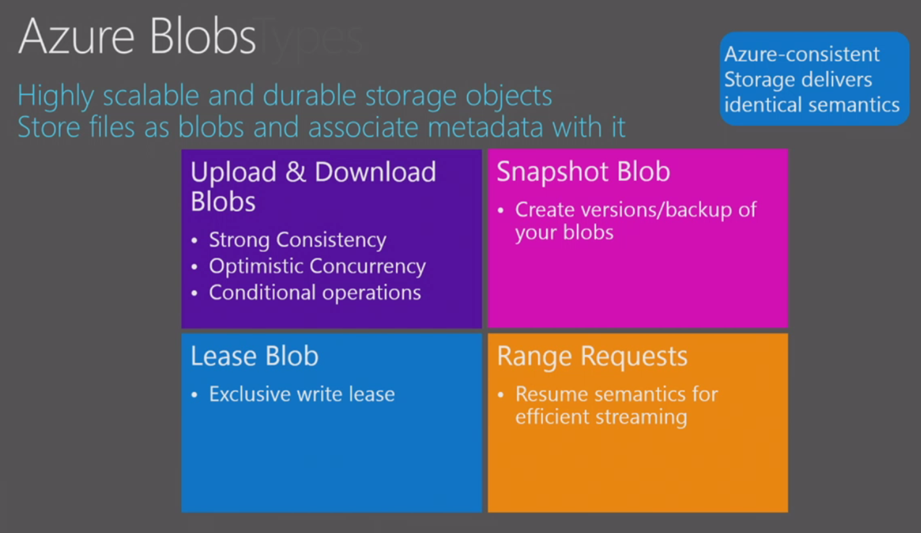

A closer look at Azure Blobs.

Azure blobs are highly scalable storage objects with associated metadata. The API is rich and strongly consistent, which helps when developing applications that use blob storage. You can upload and download blobs programmatically. You can create snapshots of a blob to keep versions for backup. With a blob lease you take an exclusive write lease on a blob: you claim ownership, and while you hold the lease you can be sure no other operation changes the blob. You can also ask which ranges of a blob actually contain data and then download only those ranges by specifying them in the range request header. This is useful, for example, when you are streaming content.
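To make the range request idea concrete, here is a minimal sketch in Python. It only builds the header values and does not talk to a storage endpoint. The helper names `range_header` and `split_ranges` are made up for this illustration; the `bytes=start-end` form is the standard HTTP byte-range syntax.

```python
def range_header(start: int, end: int) -> dict:
    """Build an HTTP Range header for the inclusive byte range [start, end]."""
    if start < 0 or end < start:
        raise ValueError("expected 0 <= start <= end")
    return {"Range": f"bytes={start}-{end}"}


def split_ranges(total_size: int, chunk_size: int) -> list:
    """Split total_size bytes into inclusive (start, end) ranges of at most chunk_size bytes."""
    if total_size < 0 or chunk_size <= 0:
        raise ValueError("expected total_size >= 0 and chunk_size > 0")
    return [
        (start, min(start + chunk_size, total_size) - 1)
        for start in range(0, total_size, chunk_size)
    ]


if __name__ == "__main__":
    # Hypothetical 1000-byte blob fetched in 400-byte pieces.
    for start, end in split_ranges(1000, 400):
        print(range_header(start, end))
```

A streaming client could walk through these ranges one by one and request each piece only when it is needed.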

There are two Azure blob types, block blobs and page blobs, and each has its own purpose.

- Block blobs: designed for efficient uploads of large blobs and optimized for streaming and archiving workloads. A block blob can be up to 200 GB and is composed of a collection of blocks of up to 4 MB each.

- Page blobs: a collection of 512-byte-aligned pages, optimized for random, page-aligned read/write operations. A page blob can grow up to 1 TB.
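The two size rules above can be sketched with a little arithmetic. This is an illustration only, assuming binary units (4 MB = 4 * 1024 * 1024 bytes); the function names are hypothetical and not part of any storage API.

```python
BLOCK_SIZE_MAX = 4 * 1024 * 1024  # 4 MB block limit quoted above (binary units assumed)
PAGE_SIZE = 512                   # page blobs are addressed in 512-byte pages


def block_count(blob_size: int, block_size: int = BLOCK_SIZE_MAX) -> int:
    """Number of blocks needed to hold blob_size bytes (ceiling division)."""
    if blob_size < 0 or block_size <= 0:
        raise ValueError("expected blob_size >= 0 and block_size > 0")
    return -(-blob_size // block_size)


def is_page_aligned(offset: int, length: int) -> bool:
    """True when a write starts on a page boundary and covers whole pages."""
    return offset >= 0 and length > 0 and offset % PAGE_SIZE == 0 and length % PAGE_SIZE == 0


if __name__ == "__main__":
    ten_mb = 10 * 1024 * 1024
    print(block_count(ten_mb))         # two full 4 MB blocks plus one partial block
    print(is_page_aligned(512, 1024))  # starts at page 1 and spans two pages
    print(is_page_aligned(100, 512))   # offset 100 is not on a page boundary
```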

Now let's dive deeper into the blob persistence design. As mentioned earlier in this blog series, the blob back-end is installed on the SOFS nodes in the SOFS cluster. This component keeps both the blob metadata and the blob data. The blob metadata is persisted in metadata stores, each scoped to a large number of containers on the volume. All blob data resides on ReFS volumes, and the persistence approach depends on the blob type: block blobs use a chunk store (chunk-based design) and page blobs use ReFS files (file-based design).

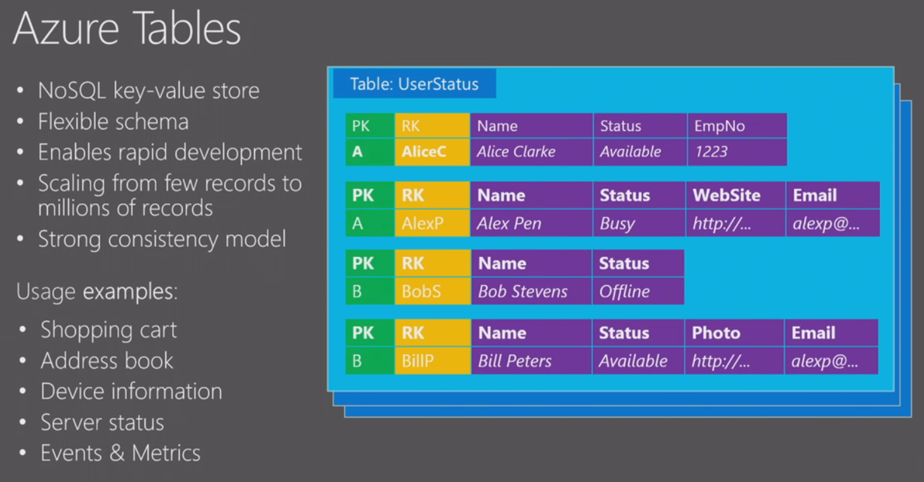

A look at the Azure Table service

Azure Tables is a NoSQL key-value store. Unlike a SQL database, it does not require a schema that defines columns and data types (structured data). That means you can create a table in Azure and put so-called entities in it. The example below shows four rows (entities). The only components that uniquely identify an entity are the partition key (green column) and the row key (yellow column) together.

The partition key determines how the data in that table is partitioned. The row key then defines the unique ID within that partition. The advantage of tables is that they enable rapid deployment: you can scale from a few rows to millions of records in a single table.
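The keying scheme can be sketched as a small in-memory model. This is not the Azure Table API; the `Table` class and its methods are hypothetical and only show that an entity is identified by partition key plus row key, and that entities in one table do not have to share a schema.

```python
class Table:
    """Toy schema-less table: entities are identified by (partition key, row key)."""

    def __init__(self):
        self._partitions = {}  # partition_key -> {row_key -> properties}

    def insert(self, partition_key, row_key, **properties):
        partition = self._partitions.setdefault(partition_key, {})
        if row_key in partition:
            raise KeyError(f"entity ({partition_key!r}, {row_key!r}) already exists")
        partition[row_key] = dict(properties)

    def get(self, partition_key, row_key):
        return self._partitions[partition_key][row_key]

    def query_partition(self, partition_key):
        """Return a copy of all entities stored in one partition."""
        return dict(self._partitions.get(partition_key, {}))


if __name__ == "__main__":
    customers = Table()
    # Two entities in the same partition with different properties (no fixed schema).
    customers.insert("NL", "001", name="Alice", city="Amsterdam")
    customers.insert("NL", "002", name="Bob", phone="555-0100")
    # The same row key can be reused in another partition.
    customers.insert("BE", "001", name="Carol")
    print(customers.get("NL", "002"))
```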

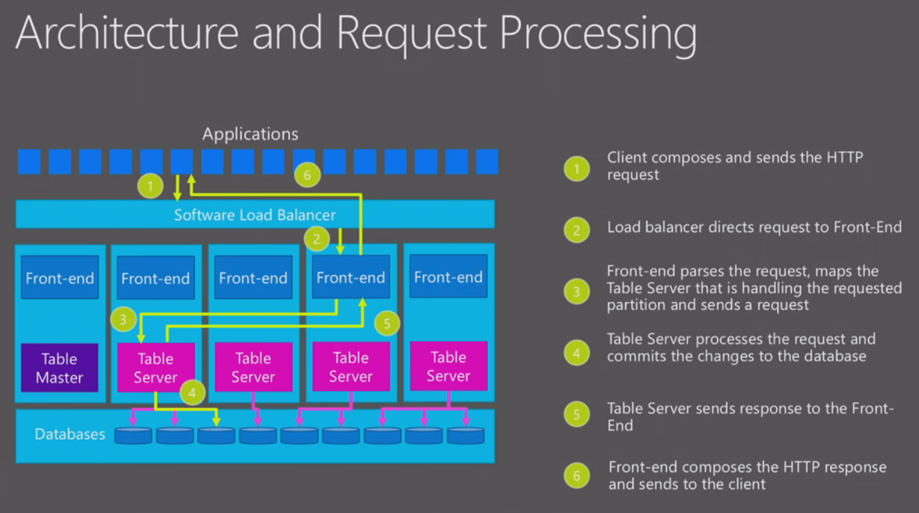

Architecture of the table service

At the bottom of the picture below you see the databases, which are stored on a SOFS cluster. Above them are the table servers that handle requests to the databases. They are responsible for managing and updating all the data; you can think of them as query/transaction processors. Each one owns one or more database connections, depending on the load. When the load on the table servers changes, an automatic load-balancing mechanism can move databases between them. The table master helps the table servers coordinate the traffic and makes sure that each database is managed by only one table server at a time. The front-end servers are web servers in a farm that handle the requests from the application, and on top are the load balancers that distribute application requests across the front-end farm.
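The coordinating role of the table master can be sketched as follows. This is a toy model and not the actual implementation: the `TableMaster` class, its methods and the "least loaded server" rule are assumptions made for illustration. The one property it shows is that a database never has more than one owning table server.

```python
from collections import Counter


class TableMaster:
    """Toy coordinator: every database is owned by exactly one table server."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._owner = {}  # database name -> table server name

    def assign(self, database):
        """Assign a database to the least loaded server (assumed policy)."""
        if database in self._owner:
            return self._owner[database]
        load = Counter(self._owner.values())
        # Ties are broken by server name so the result is deterministic.
        server = min(self._servers, key=lambda s: (load[s], s))
        self._owner[database] = server
        return server

    def move(self, database, server):
        """Rebalance: hand a database to another server; the old owner loses it."""
        if server not in self._servers:
            raise ValueError(f"unknown table server {server!r}")
        self._owner[database] = server

    def databases_of(self, server):
        return sorted(db for db, owner in self._owner.items() if owner == server)


if __name__ == "__main__":
    master = TableMaster(["ts1", "ts2"])
    for db in ["db1", "db2", "db3"]:
        print(db, "->", master.assign(db))
    master.move("db3", "ts2")
    print(master.databases_of("ts1"), master.databases_of("ts2"))
```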

Let's look at how request processing works in Azure Table storage.

At the top is the application. The application requests data by sending an HTTP request (XML or JSON). The load balancer directs the request to a front-end. The front-end parses the request, looks up the table server that handles the requested partition and forwards the request to it. The table server then processes the request, applying filters and/or committing changes to the database, and sends the response back to the front-end. The front-end composes the HTTP response and sends the data back to the client.
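The request flow described above can be sketched in a few lines of Python. Everything here is hypothetical: the JSON request shape, the `FrontEnd` and `TableServer` classes and the status codes are stand-ins chosen for the example, not the real Table service wire format. The load balancer is left out; the sketch starts at the front-end.

```python
import json


class TableServer:
    """Toy table server: owns the rows of the partitions routed to it."""

    def __init__(self, name):
        self.name = name
        self.rows = {}  # (partition_key, row_key) -> entity

    def process(self, op, partition_key, row_key, entity=None):
        key = (partition_key, row_key)
        if op == "insert":
            self.rows[key] = entity  # commit the change
            return 201, entity
        if op == "get":
            if key in self.rows:
                return 200, self.rows[key]
            return 404, None
        return 400, None  # unknown operation


class FrontEnd:
    """Toy front-end: parses the request and maps the partition to a table server."""

    def __init__(self, partition_map):
        self.partition_map = partition_map  # partition_key -> TableServer

    def handle(self, raw_request: str) -> str:
        request = json.loads(raw_request)                         # parse
        server = self.partition_map[request["partition_key"]]     # map to table server
        status, body = server.process(                            # forward and process
            request["op"],
            request["partition_key"],
            request["row_key"],
            request.get("entity"),
        )
        # Compose the response that goes back to the client.
        return json.dumps({"status": status, "served_by": server.name, "body": body})


if __name__ == "__main__":
    ts1, ts2 = TableServer("ts1"), TableServer("ts2")
    front_end = FrontEnd({"NL": ts1, "BE": ts2})
    insert = {"op": "insert", "partition_key": "NL", "row_key": "001",
              "entity": {"name": "Alice"}}
    print(front_end.handle(json.dumps(insert)))
    lookup = {"op": "get", "partition_key": "NL", "row_key": "001"}
    print(front_end.handle(json.dumps(lookup)))
```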

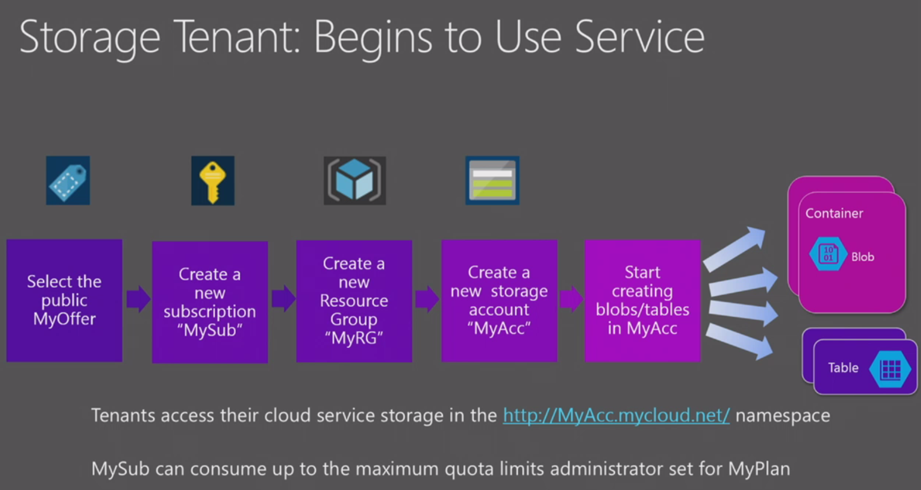

That was the technical overview of the Azure Blob and Table storage services that will be available in Azure Stack. In the last blog post we discussed the creation of the offer that tenants consume. Let's quickly look at how the tenant actually requests and consumes this service. First the tenant selects the offer. From that offer a subscription is created with the quotas defined in the offer. The tenant then creates a resource group, and in that resource group the tenant can create a new storage account. In that storage account the tenant then creates containers for blob storage or creates tables. Within that storage account all data is accessible via a single namespace.

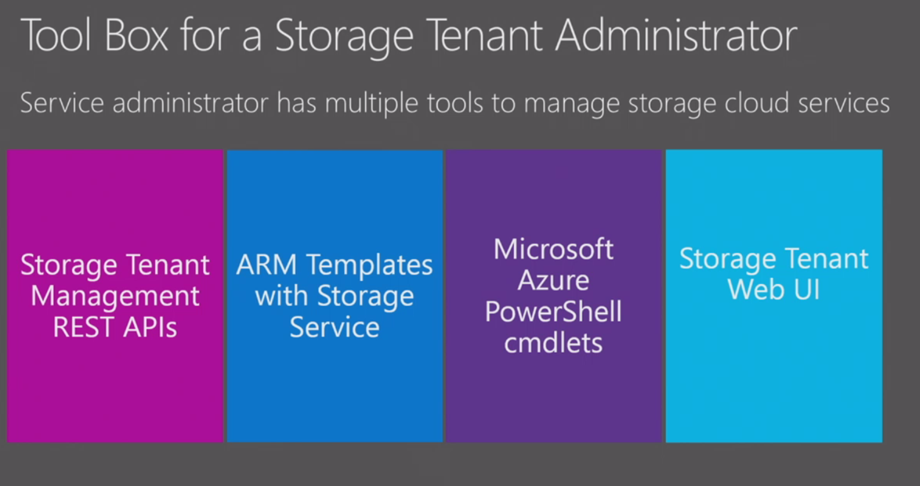

The tenant can use multiple tools to manage storage in Azure Stack. The Storage Tenant Management APIs can be called programmatically. You can use Azure Resource Manager templates with the storage service. You can choose between Azure PowerShell and the Azure CLI. Last but not least, the tenant portal lets you create and manage storage accounts, containers and tables.

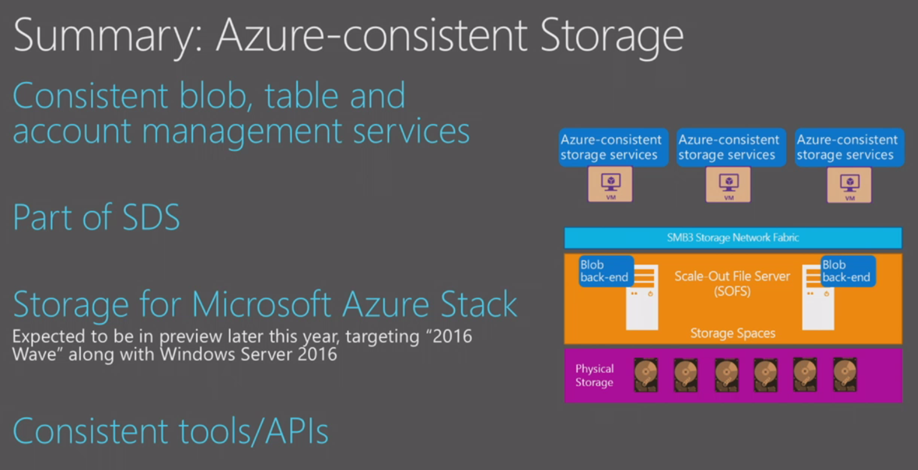

To recap this two-part blog series:

- Azure Consistent Storage is a consistent blob, table and account management service.

- It is part of the Software Defined Storage stack.

- It is THE storage for Microsoft Azure Stack.

- It uses tools and APIs that are consistent with Azure.

I hope to be able to play with the Azure Stack bits soon and start building this solution in our lab.